AI and hallucinations

A bit of humor, since it inflates my qualifications, but it is also a red flag that progress has stalled, and just tossing more resources (compute and GPUs) at the problem is unlikely to fix it.

I know I am treading on hazardous ground whenever I write about AI (particularly Generative AI, or Large Language Models (LLMs)), because I see a flurry of people unsubscribing from my newsletter.

But so be it.

Here’s the deal, though: you cannot ignore the flaws. A recent incident brought that to the forefront.

It is no secret that I am a product manager, and I work at Cisco, an IT hardware and software company. My team builds training and certifications to support our principal products and technology.

Recently, we launched a training to cover the latest iteration of the Catalyst Center, “controller” software that provides some cool network configuration, maintenance, and optimization capabilities. But that is irrelevant here.

To support this launch, our marketing team asked me to draft a blog post to be queued up, and I drafted it about two months ago. Alas, yesterday it went live.

If you want to read about it, you can find it here: Discover the Cisco Catalyst Center Fundamental (CCFND) Training Program.

It is not my best writing; it is the usual corporate pablum.

No, the tie to AI is not in this vanilla blog post, but in something shared by a colleague who saw it on his Facebook feed. Now, I am not on Facebook, so I can’t find this stuff, but I have people who can’t help but look up what I do.

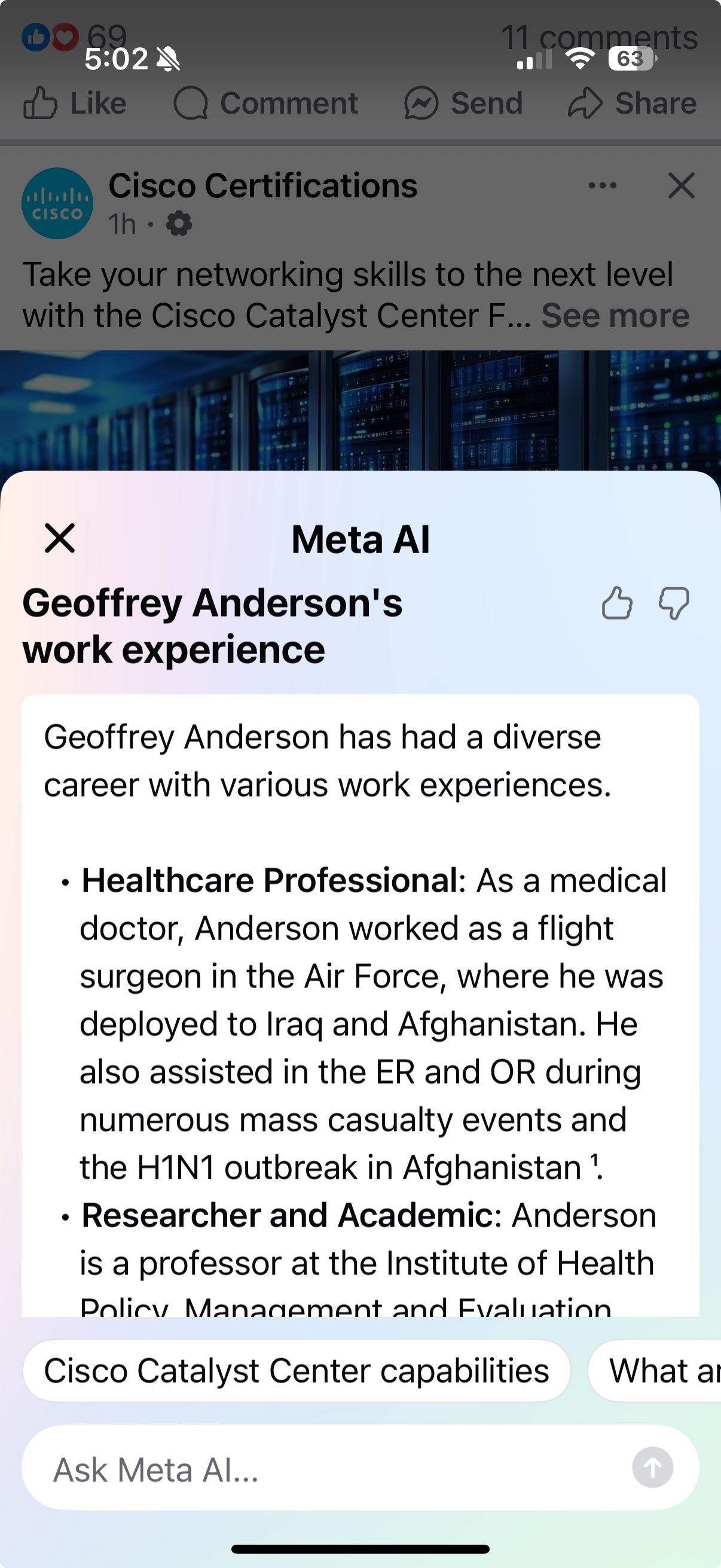

Anyway, this person used the Facebook “about” feature to learn more, which invokes their AI (Meta AI) to summarize my background. When this was shared with me, I about fell out of my chair:

Needless to say, NONE of this is accurate.[1] But I did forward this to my boss, highlighting the bit about me being a professor at the Institute of Health Policy Management and Evaluation, because if that is my job, I certainly deserve more money.

This is clearly an example of AI hallucination: the model fucking dreams up shit and presents it authoritatively, and at times comically.

I mean, this is a blog post about Information Technology training, and if I were going to make shit up about myself, I would say something like 25 years in IT design, deployment, and operational excellence, not something completely orthogonal like medicine, with tours of duty in Afghanistan and Iraq.

I mean, that is totally fucking bonkers.

The real problem, though, is that ever since ChatGPT burst onto the scene in the fall of 2022, these LLMs have had a problem with outputting garbage.

Sure, they can sound authoritative and even include citations. But it is still bullshit.

The problem

Since their initial public launch, there have been several waves of ever-bigger models, and lately the big bet by all these companies is on “chain of reasoning” to supposedly improve accuracy and to show the steps the inference model takes.

OpenAI just launched two new versions of their o-series chain of reasoning models, o3 and o4-mini, which they apparently spent damn near a billion dollars to train. And the results?

Not so good. In fact, the early reporting is that these two chain of reasoning models are more prone to making shit up, and even to faking the steps in the chain of reasoning. According to TechCrunch (“OpenAI’s new reasoning AI models hallucinate more”), even OpenAI acknowledges that o3 and o4-mini hallucinate more than its earlier models:

> Hallucinations have proven to be one of the biggest and most difficult problems to solve in AI, impacting even today’s best-performing systems. Historically, each new model has improved slightly in the hallucination department, hallucinating less than its predecessor. But that doesn’t seem to be the case for o3 and o4-mini.
>
> According to OpenAI’s internal tests, o3 and o4-mini, which are so-called reasoning models, hallucinate more often than the company’s previous reasoning models — o1, o1-mini, and o3-mini — as well as OpenAI’s traditional, “non-reasoning” models, such as GPT-4o.
>
> Perhaps more concerning, the ChatGPT maker doesn’t really know why it’s happening.

Oops, I guess the nearly $5B you spent last year didn’t really move the needle?

You have to wonder whether this technology is hitting a wall (a lot of skeptics are beginning to say that the scaling wall is here, and that just tossing more compute and GPU resources at it isn’t going to lead to any significant breakthroughs).

OpenAI’s own internal testing produced surprising results (the fact that they admit this at all fascinates me too):

> OpenAI found that o3 hallucinated in response to 33% of questions on PersonQA, the company’s in-house benchmark for measuring the accuracy of a model’s knowledge about people. That’s roughly double the hallucination rate of OpenAI’s previous reasoning models, o1 and o3-mini, which scored 16% and 14.8%, respectively. O4-mini did even worse on PersonQA — hallucinating 48% of the time.

Look, if I had an employee who made shit up one third of the time, I would put them on a PIP and start looking to manage them out. But OpenAI? They are happy to sell subscriptions for $30 a month, or $200 a month for their “pro” tier,[2] even if the product is spitting out garbage.

That gets back to the essential point: the current crop of LLMs is not intelligent. They do not think. They use fancy mathematics and statistics to predict what might come next in a stream of output, but the tech pundit class (Casey Newton, Kevin Roose, and others) is all in on them being the path to AGI, the “whole human replacement” AI.

You might have heard that “Agentic” AI is the next big thing. That is, wrapping LLMs into “agents” that can perform tasks.

The idea is that you can tell your “agent” to book a meeting with people, and it will access their calendars, look for times when they are free that align with your schedule, book the meeting, send the invite, reserve a room, and add the teleconference information.

But that shit just doesn’t work today. At least Apple has the decency to tell you that it can get shit wrong; the others are plowing ahead at full speed.

No, I am not worried about my job being subsumed by this non-thinking pile of silicon. Perhaps in the future true general-purpose intelligence will be bestowed upon the machines, but the current trajectory is beginning to look like a dead end.

Until an AI agent can function as an entry level employee without a ton of intervention, the workforce threat remains remote. And from what I am seeing, we are pretty fucking far from that minimal level of acceptable performance.

Alas, that is not going to stop the executive class from replacing flesh-and-blood workers with AI in an effort to save costs.

[1] My history: I worked my way through college as a cook, and after graduation I entered the tech world as a chemical technician supporting wet etch and plating processes. Then I was a process engineer, from there I jumped to applications engineer and manager, then to product management. In product management, I have worked in semiconductor capital equipment, networking hardware, industrial measurement and test, enterprise communications software (fax servers), and then nanotechnology, before landing where I am now, in adult technical education.

[2] I need to point out that OpenAI has roughly 500M monthly users, but the bulk of them are free users; the paid accounts are a much smaller group, and all evidence is that even at the $200-a-month subscription they lose money on each user. This is not a healthy business with a plan to become profitable (they claim that once Stargate comes online in 2030 they will be net profitable, and I’ll be dating Jessica Alba by then too).

Thank you Doctor Anderson for your service. Had to get that in but thank you for all you do.

I guess Acid AI needs an acid bath.

When AI destroys someone's life, hopefully we can sue the shit out of the companies that put this crap on the market.

I had an odd experience a few days ago. I was going to use the word "effluvium" in a comment and double-checked the meaning via Google, looking to confirm my recollection of the word as a descriptor of a bad smell.

The result was identified as an AI response and claimed that the word effluvium referred to a potential, partial, or complete loss of hair, then gave an entire backstory of the word that was wholly inaccurate. WTF?

Of course when I checked just now looking to copy and paste that AI hallucination here in this comment, it wasn't there anymore.